No need to run from the robots: Nobel laureate Daron Acemoglu talks to MoneyWeek

Daron Acemoglu, Nobel Prize winner and professor at MIT, tells Matthew Partridge why the gains from AI have been overhyped

Matthew Partridge: Many people have produced very high estimates of the productivity gains from AI. Some think it will boost total US GDP by 7% over ten years, while others even project that the annual GDP growth rate will go up by several percentage points.

Yet your recent article, “The simple macroeconomics of AI”, estimates that the cumulative gains from AI over the next decade will be much more modest – an extra 0.6%-0.8% in US total factor productivity (the increase in output that you get without any extra inputs of labour or capital). That implies a total increase in America’s GDP of 1.5% by the end of the period.

Daron Acemoglu: Any predictions, including my own, are going to be hugely uncertain, as everybody’s making lots of assumptions. If what some of the industry insiders are claiming about General Intelligence (GI, a computer able to reason in a way that a human can) coming around in two or three years’ time is true, my figures would turn out to be massive underestimates.

But assuming that AI keeps developing the way it has for the last two years, and GI doesn’t materialise, then my view is that pretty much anything that involves manual work, such as construction or cleaning and maintenance, will be unaffected. There is no way the AI models are good enough right now to be incorporated into robotics, and the advances in flexible robotics needed to replace these jobs will take at least ten years.

Similarly, CEOs, politicians and civil servants are still going to keep their jobs. What’s more, people whose work involves very high levels of social interaction, such as entertainers and psychiatrists, are also largely going to be unaffected. That leaves the remaining 20% of the economy, made up of cognitive tasks in stable environments such as offices, which could be either replaced or at least augmented by AI when it comes to preparing reports, inventory accounting and the like.

The problem is that if past technologies have anything to teach us, it is that even those tasks that can be taken over by AI will be slow to be replaced because of the cost. So I estimate that a quarter of that 20%, or 5% of the economy, is going to be heavily affected by AI within the next decade. Now, all the research done in laboratories and real-world settings suggests that even there the cost savings will probably amount to 15%. So 15% of 5% equals a boost to total factor productivity of around 0.75%.
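
For readers who want to check the sum, the estimate is simply a chain of multiplications. The Python sketch below reproduces it using only the three figures Acemoglu quotes: the 20% of the economy exposed to AI, the quarter of that actually taken over within a decade, and the 15% cost saving. The function and parameter names are our own, purely illustrative, and this is a back-of-the-envelope check of the arithmetic, not the model in his paper.

```python
# Back-of-the-envelope check of the TFP arithmetic quoted in the interview.
# The names tfp_boost, exposed_share, adopted_fraction and cost_saving are
# illustrative assumptions, not terms taken from Acemoglu's paper.

def tfp_boost(exposed_share: float, adopted_fraction: float, cost_saving: float) -> float:
    """Return the economy-wide TFP gain as a fraction of output.

    exposed_share    -- share of the economy made up of AI-exposed tasks (20%)
    adopted_fraction -- fraction of those tasks taken over within a decade (a quarter)
    cost_saving      -- average cost saving on the tasks that are taken over (15%)
    """
    affected_share = exposed_share * adopted_fraction  # 20% x 1/4 = 5% of the economy
    return affected_share * cost_saving                # 5% x 15% = 0.75%


if __name__ == "__main__":
    boost = tfp_boost(exposed_share=0.20, adopted_fraction=0.25, cost_saving=0.15)
    print(f"TFP boost over the decade: {boost:.2%}")  # prints "TFP boost over the decade: 0.75%"
```

Because the result is a straight product of the inputs, doubling either the share of the economy affected or the size of the cost saving doubles the estimated gain, which is why those are the two numbers a critic would need to challenge.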

If someone can prove that I’m wrong, I’m happy for them to do so, but they will either have to find a much bigger cost saving or tell me why more workers than the 5% that I’ve mentioned will be affected. Or, if you still want to say the impact of AI is going to be greater than I’m projecting, you’re going to have to make the case that AI is going to do something revolutionary like cure all cancer.

Matthew Partridge: Over the past month, we’ve had two big AI stories: the announcement of up to $500 billion of investment in AI infrastructure through the Stargate Project, and the unveiling of DeepSeek, which claims to perform at the level of the best existing AI models for a fraction of the development cost. Do these developments change your views at all?

Daron Acemoglu: I think people are reading too much into Stargate, because even before it was announced the planned investments in the AI sector were massive, and they are going to continue that way, which is all part of the hype. DeepSeek is interesting because it can be read in two ways. You can say that it was a major breakthrough, and if you build on it with more data and more chips, you will be able to accelerate things, which reflects the “scale is everything” view held by OpenAI and many Western companies.

However, I don’t agree with this view. I think that DeepSeek instead shows that the model of putting together as many graphics processing units (GPUs, the specialised chips used to train and run AI models) as you can is completely the wrong approach. Through clever engineering tricks, DeepSeek has shown that it is the software, not the hardware, that matters.

What DeepSeek claims to have done isn’t that different from what the machine-learning community have already been talking about for the last 30 years. It has just put all of the techniques together, and in the process shown how Silicon Valley’s obsession with scale and hardware has made it “rotten” with inefficiency.

Matthew Partridge: So does DeepSeek change your assessment about potential gains from AI?

Daron Acemoglu: Well, DeepSeek itself, like most of the Silicon Valley companies, is aiming to produce a GI, and thinks that it is feasible. If it succeeds, I’ll probably have to rewrite my estimates. But while some of the wilder tech people put the chances of a GI being built within five to ten years at 80%-90%, respected industry insiders I’ve talked to put them at 10%-20%.

What’s more, I think even this is an overestimate, as I don’t think that GI is achievable, at least not in the next five to ten years. I don’t see any of these models reasoning at the level of humans for now.

Matthew Partridge: You’ve argued that the headline estimate of a 1.5% gain in GDP may not take into account the ways in which AI could reduce human welfare by creating things that are undesirable.

Daron Acemoglu: Yes, that’s something I’m definitely worried about. I don’t know what the future will hold, but there is increasing evidence that Facebook and other social media have had a lot of negative effects, even though they have been hugely profitable in terms of advertising revenue.

Matthew Partridge: Given that AI will affect some workers more than others, how do you think it will change inequality?

Daron Acemoglu: We need to distinguish between two types of inequality. One type, wealth inequality, is driven by people at the very top becoming extremely rich, and that is already happening as hype drives the valuations of companies in a concentrated industry through the roof; witness Elon Musk’s fortune.

The second aspect, in terms of labour-income inequality, is harder to judge. There’s strong evidence that robots and automation during the 1990s led to massively disruptive distributive effects that saw the livelihoods of men who didn’t have a college education take a big hit.

However, a similarly visible big group of losers is less likely this time around. Firstly, the number of workers who will be affected is much smaller than around the turn of the millennium, although they will probably be affected a bit faster. What’s more, the tasks that AI is set to replace are much more dispersed, both geographically and across occupations, so you won’t have a specific group that gets decimated. While women may be slightly more affected than men, the difference won’t be huge.

Matthew Partridge: Do you think there’s a chance that governments could end up wasting large amounts of money by following Silicon Valley down the AI rabbit hole and investing in data centres that don’t end up delivering anything?

Daron Acemoglu: While I don’t know the answer to that right now, what I can say is that the industry itself is wasting a lot of money. It has put close to a trillion dollars into the hardware, without seeing any of the breakthroughs that people were hoping for.

We also need to realise that many of these models are actually very cost- and energy-intensive, and are being used for frivolous activities to help firms build up a consumer base. So even though most people have a calculator (or spreadsheet) that can multiply two numbers together, they are getting ChatGPT to do such a basic task, which wastes a lot of energy since it really isn’t designed for that. At the same time, given that I’m worried about the prospect of a tech oligarchy, I couldn’t say that it would be categorically wrong for the government to get involved in the industry.

However, if the government just blindly invests in things, or tries to be the innovator itself, that’s not going to work. I think the government needs to facilitate more competition and perhaps support new things that are not being tried in the industry, such as the next DeepSeek. But you could end up with the government giving money to fly-by-night companies, or wasting cash itself. Let’s face it, neither the US nor the UK government’s record has been that inspiring of late.

Matthew Partridge: Do you think there is a parallel between AI and the internet revolution? You had a period of three to five years (between 1996 and 2001) where companies spent huge sums of money on business models that were either poor or ahead of their time. And then you had a period where no one wanted to talk about the internet – people only changed their minds after we started to see some real productivity gains a bit later. Could there be a repeat of this scenario with AI?

Daron Acemoglu: I think there are several very important parallels and some differences. For example, I think AI’s and the internet’s potential as social goods are comparable, and I also think that both of them can be used for good or ill. The internet has been used to offer myriad new services and products that nobody could have dreamed of in 1995 – but also to create new scams, new traps and horrible things for people to do on the internet.

I also think the AI industry needs to prove that there is a way to monetise this new technology, something the internet sector initially failed to do. And while I have every confidence that that’s feasible with AI, I don’t have confidence in OpenAI or the other current companies to do it. Still, I think a repeat of a crash on the scale of 1999-2002 is unlikely because so many firms are now invested in AI, which will prevent investment from drying up.

Matthew Partridge: At present, there is a big dispute over the “use” of intellectual property to “train” the AI models, as shown by the controversy over the changes to British copyright law being proposed by the government, which some say will allow the tech companies to trample over the rights of the creative industry.

Daron Acemoglu: I absolutely agree that there’s going to be a huge battle, although I’d frame it more as one over data than over intellectual property, since most of the plundering is taking place at the lower end of the creative spectrum. For now, the tech companies are trying to use their political influence to keep taking this data, which is a bad idea since it’s in their long-term interest to create a framework where it can be bought and sold.

Daron Acemoglu is the Elizabeth and James Killian professor of economics at the Massachusetts Institute of Technology and faculty co-director of MIT’s Shaping the Future of Work Initiative. In 2024 he won the Nobel Prize for Economics for his work on how political and economic institutions affect a nation’s development.

This article was first published in MoneyWeek's magazine.

Get the latest financial news, insights and expert analysis from our award-winning MoneyWeek team, to help you understand what really matters when it comes to your finances.

-

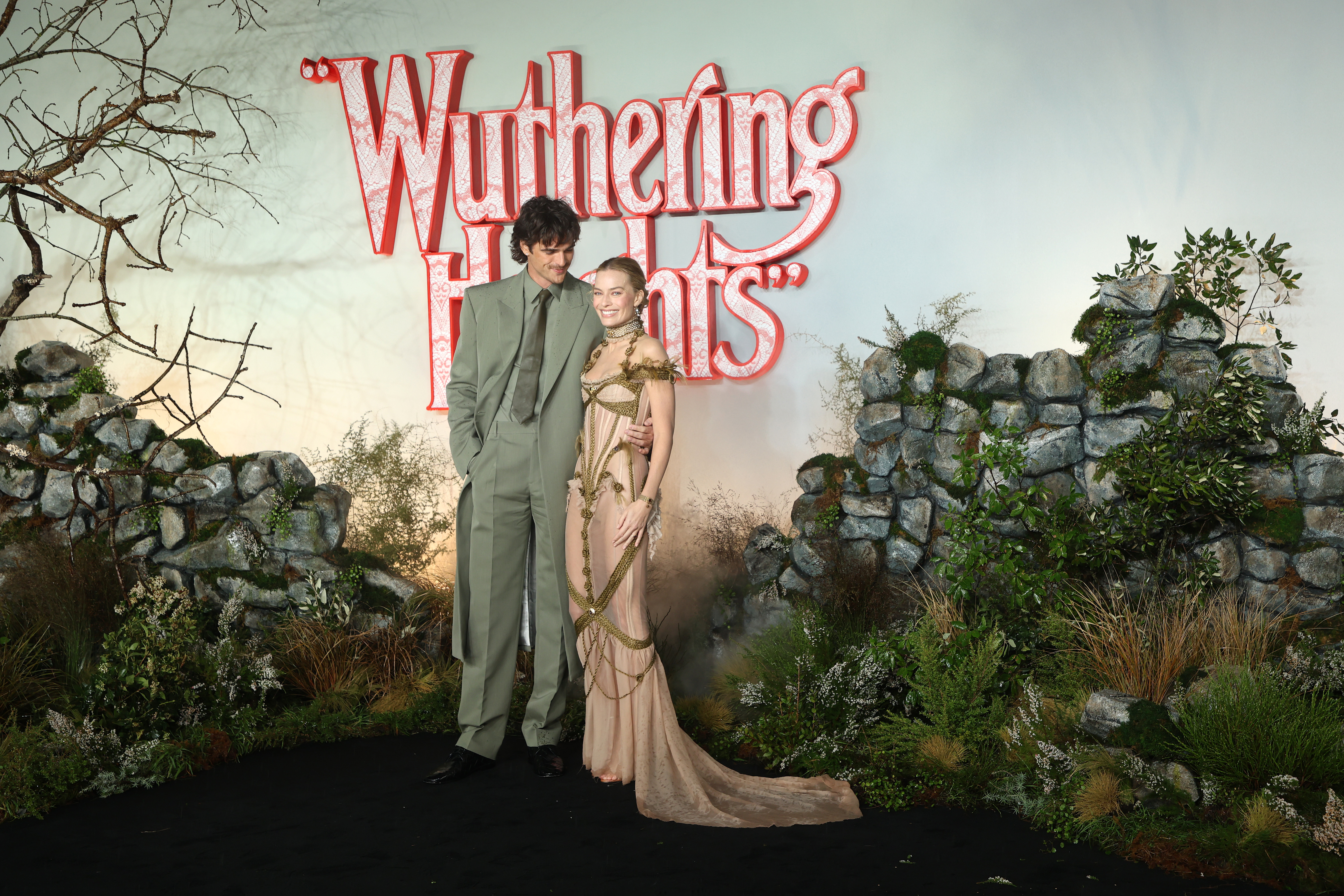

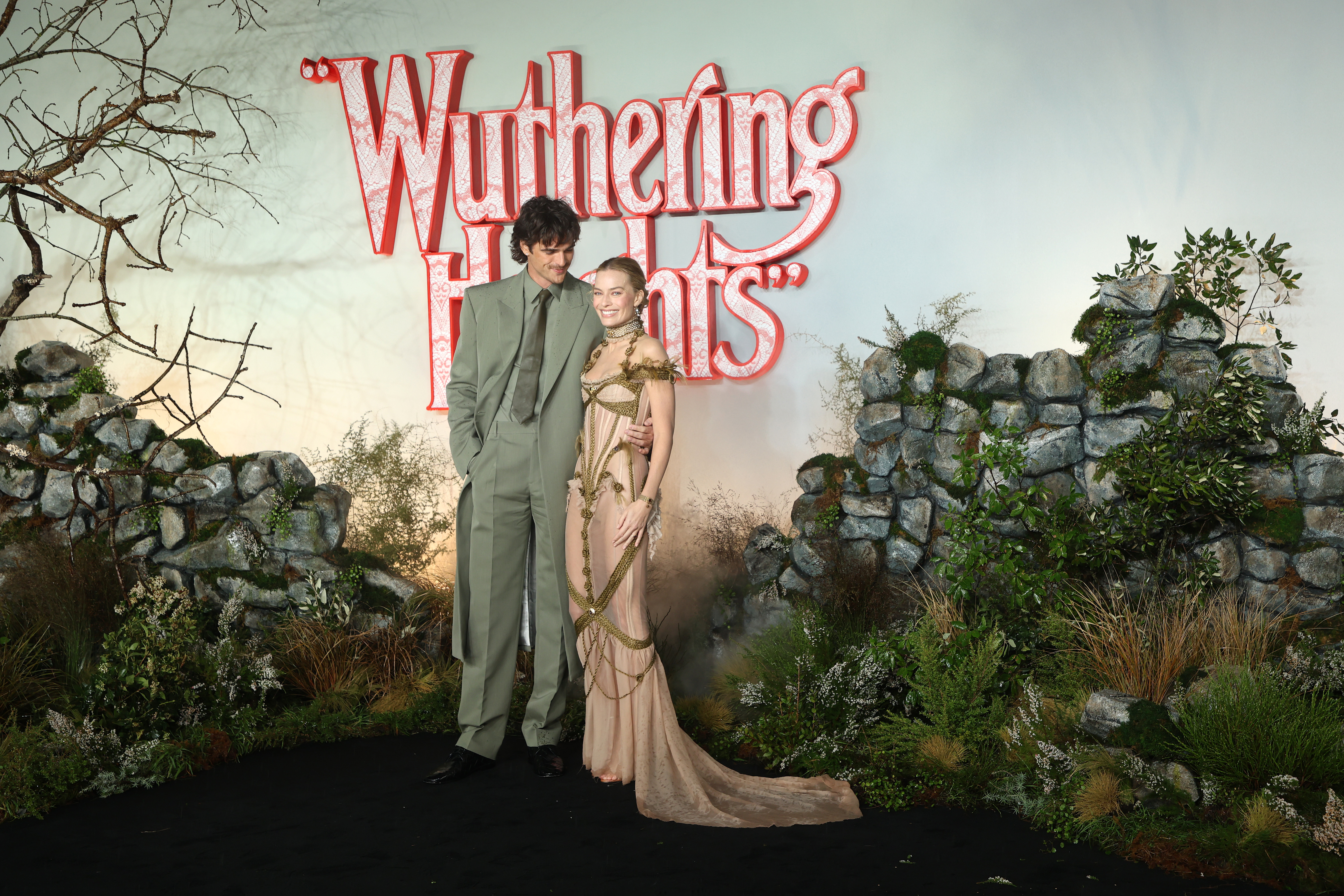

The rare books which are selling for thousands

The rare books which are selling for thousandsRare books have been given a boost by the film Wuthering Heights. So how much are they really selling for?

-

Pensions vs savings accounts: which is better for building wealth?

Pensions vs savings accounts: which is better for building wealth?Savings accounts with inflation-beating interest rates are a safe place to grow your money, but could you get bigger gains by putting your cash into a pension?

-

The rare books which are selling for thousands

The rare books which are selling for thousandsRare books have been given a boost by the film Wuthering Heights. So how much are they really selling for?

-

What is physical AI, and how can you invest in it?

What is physical AI, and how can you invest in it?Artificial intelligence is increasingly taking physical form and could completely transform how we live. How can investors gain exposure?

-

How to invest as the shine wears off consumer brands

How to invest as the shine wears off consumer brandsConsumer brands no longer impress with their labels. Customers just want what works at a bargain price. That’s a problem for the industry giants, says Jamie Ward

-

In defence of GDP, the much-maligned measure of growth

In defence of GDP, the much-maligned measure of growthGDP doesn’t measure what we should care about, say critics. Is that true?

-

A niche way to diversify your exposure to the AI boom

A niche way to diversify your exposure to the AI boomThe AI boom is still dominating markets, but specialist strategies can help diversify your risks

-

New PM Sanae Takaichi has a mandate and a plan to boost Japan's economy

New PM Sanae Takaichi has a mandate and a plan to boost Japan's economyOpinion Markets applauded new prime minister Sanae Takaichi’s victory – and Japan's economy and stockmarket have further to climb, says Merryn Somerset Webb

-

Early signs of the AI apocalypse?

Early signs of the AI apocalypse?Uncertainty is rife as investors question what the impact of AI will be.

-

Reach for the stars to boost Britain's space industry

Reach for the stars to boost Britain's space industryopinion We can’t afford to neglect Britain's space industry. Unfortunately, the government is taking completely the wrong approach, says Matthew Lynn