Art vs AI: artists’ uprising takes on the bots

Artificial intelligence (AI) performs impressively, but much of it is based on human work that was taken without payment. The government thinks this is fine. Copyright holders beg to differ.

Generative artificial intelligence (AI) is already capable of impressive feats, but it requires a constant diet of human-generated content to grow its capabilities. Much of that content has been scraped from the web without the consent (or indeed knowledge) of its original creators.

Developers typically argue that “fair use” exemptions (which allow the use of copyrighted material, usually excerpts, under specific conditions) should apply to what they do. Publishers, music companies and authors bitterly disagree, while legal systems and governments are racing to catch up. For the UK government, the dilemma is that it desperately wants this country to attract AI companies that can scale up and drive economic growth. But it also needs to protect Britain’s world-class and highly tax-generative creative industries.

What is the government planning on copyright and AI rules?

Nothing is certain yet, but a consultation on its draft proposals closed on 25 February, amid a chorus of disapproval from many of the UK’s leading creative artists. Until now, the UK has had one of the strongest copyright regimes in the world.

Essentially, the government is proposing exemptions from existing copyright law for AI web-crawlers. The onus would be on rights holders to opt out of having their content taken free of charge, and to trace how it is being used. That would be a radical change, and it has whole industries in the creative sector worried.

Would that break international law?

It’s not clear. Some lawyers argue that the government’s proposals would very likely breach an international treaty to which the UK is a party, namely the Berne Convention. However, Peter Kyle, the technology minister overseeing the planned legislation, insists it will meet all international obligations. According to Kyle, the government “won’t legislate until the tech companies can prove that the technology can deliver the transparency that they have said that they can, and that we will find ways for the creative arts industry to make money in the digital age”.

Artists are unconvinced. Last month more than 1,000 artists, including Kate Bush, Annie Lennox and Cat Stevens, backed the release of a silent album, titled Is This What We Want?, containing nothing but background noise.

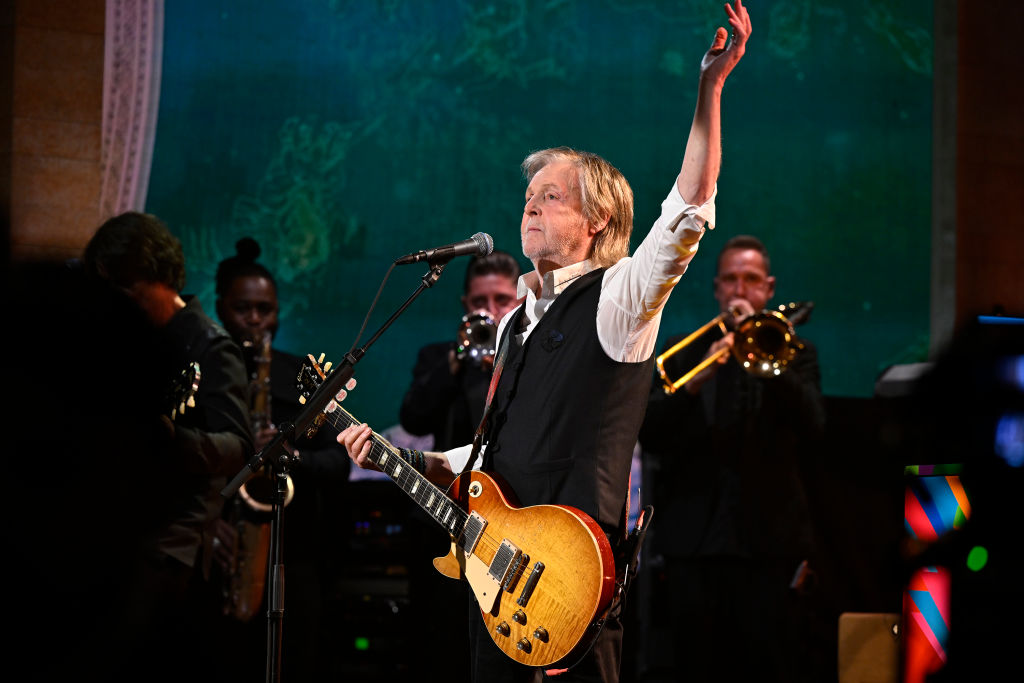

Separately, in a letter to The Times, three musical knights of the realm – Andrew Lloyd Webber, Elton John and Paul McCartney – warned against the proposals, arguing that the current copyright system “is one of the main reasons why rights holders work in Britain”.

What’s their case?

The creative artists say the government’s proposals will “smash a hole in the moral right of creators to present their work” and jeopardise a £126 billion industry that employs 2.4 million people in the UK.

They say that Britain’s creative industries “want to play their part in the AI revolution”, but they need to do so from a firm intellectual-property base. If not, Britain will lose out on its best growth opportunity. There is “no moral or economic argument for stealing our copyright”, the artists say. “Taking it away will devastate the industry and steal the future of the next generation.” In the US, the Authors Guild and 17 individual authors, including Jodi Picoult and Jonathan Franzen, are suing OpenAI and Microsoft for copyright infringement, alleging “systematic theft on a mass scale”.

What are other states doing?

In the US, the Trump administration repealed Biden-era regulation, adopting a more light-touch approach to foster growth and innovation. Indeed, part of what’s driving the British government line is its wish to position the UK as an AI-friendly powerhouse, aligned with the US on tech policy.

In the EU, an Artificial Intelligence Act passed last year obliges tech firms to comply with the EU’s strict 2019 copyright law. That law includes an exemption for “text and data mining”, but it was framed before the emergence of mass-market generative AI, and was not intended to let the world’s largest companies harvest vast amounts of intellectual property. As a result, there are legal disputes between creators or publishers and AI firms in many European countries. Everywhere, the lack of regulatory clarity – and obvious potential for commercial disputes in a fast-moving sector – means that courts are being kept busy. In the US, several high-profile cases filed by publishers against AI companies are working their way through the legal system. In the UK, Getty Images is pursuing a closely watched case against AI image-generation company Stability AI.

How will all this play out?

“These disputes are a classic example of what happens when new technologies outpace laws written for an earlier era,” says John Thornhill in the Financial Times. But the overriding principle – that no one should profit from another’s intellectual property without consent – should remain “inviolable”.

Legislation will be part of this emerging landscape, but so will market-based solutions and innovations aimed at facilitating licensing deals that compensate content creators for letting AI companies scrape their property. Already, high-profile publishers have struck ad hoc deals with AI companies. Axel Springer, News Corp and the FT have signed agreements with OpenAI, while Agence France-Presse (AFP) has partnered with Mistral.

Meanwhile, several start-ups are experimenting with new economic models. Human Native, for example, is creating a “two-sided marketplace allowing AI creators to license data from content creators”. TollBit enables AI bots and data scrapers to pay websites directly for their content. And ProRata is developing an “answer engine” that would pay a share of an AI company’s revenue to content creators whenever their work appeared in its responses. Nascent market mechanisms are developing that “could enable mutually beneficial solutions”.

This article was first published in MoneyWeek's magazine.
